Data Pre-processing for ML in 5 Minutes

DATA PRE-PROCESSING

Suppose you are a chef and you have to feed three people: Person A, Person B and Person C. Person A is vegetarian, B is eggetarian, and C is non-vegetarian. You have a bunch of ingredients, and to make food for all of them you sort the ingredients into those three categories as needed. Data pre-processing works the same way: we prepare the data before feeding it to our machine learning algorithm.


Why is it needed?

When we collect data from other sources, it is often not in a format we can feed directly into our algorithm. So we need to bring the data into the shape the algorithm expects. That's why we need data pre-processing.

How important is it?

Data pre-processing is a basic requirement before any ML algorithm works on the data. Different algorithms expect data arranged in different ways, so the data has to be shaped to fit the algorithm we use.

It directly affects how well the machine learns, because a model can only be as good as the data we use to train it.

So we should always extract the useful information from our data and process it properly, so that the trained model is as good as it can be.

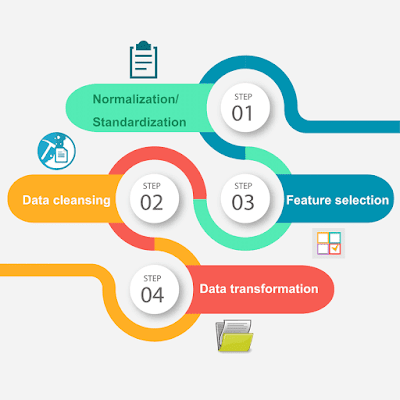

Data Pre-processing Techniques:

There are various pre-processing techniques for different types of issues in the data. I am discussing some of them here.

1. Dropping Null Values:

Almost every dataset can contain null values: because we collect data from the real world, some values are likely to be missing. Null values cause problems because many algorithms cannot work with them directly, so we need to handle the null values in a dataset before training.

--> First, check where the null data is. The df.isnull() method returns a data frame of True/False values marking every null entry, and df.isnull().sum() counts the nulls in each column.

--> After finding them, you have two options: drop the null values or fill them with other values. If you drop them with df.dropna(), by default it drops every row that contains at least one null value.

--> To drop every column that contains at least one null value instead, use df.dropna(axis=1). You can adjust this behaviour (for example with the how, thresh and subset parameters) to suit your needs; for more info, refer to the pandas documentation.
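The steps above can be sketched with pandas on a small, made-up data frame (the column names and values are purely illustrative assumptions):

```python
import numpy as np
import pandas as pd

# A small, made-up data frame with a few missing values
df = pd.DataFrame({
    "age": [25, np.nan, 47, 31],
    "city": ["Pune", "Delhi", None, "Mumbai"],
    "salary": [50000, 62000, 58000, 71000],
})

# Step 1: find the nulls. isnull() is True where a value is missing;
# .sum() then counts the missing values in each column.
null_counts = df.isnull().sum()
print(null_counts)

# Step 2a: drop every row that has at least one null (the default)
rows_dropped = df.dropna()

# Step 2b: or drop every column that has at least one null
cols_dropped = df.dropna(axis=1)

print(rows_dropped)
print(cols_dropped)
```

Here rows 1 and 2 disappear in the first case, while only the salary column survives in the second. Which option is better depends on how much data each one throws away.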


2. Filling the Null Values: Imputation

Large datasets often contain optional fields, such as a form field that some people fill in and others leave blank. In this case, instead of dropping data, we can replace the null values with derived values (such as the column mean) or with fixed values. This process is called imputation.

scikit-learn provides a class called SimpleImputer that can fill in the missing values in your dataset. For more info, refer to the scikit-learn documentation.
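Before reaching for scikit-learn, the idea can be sketched in plain pandas with fillna. This is a swapped-in illustration, not the post's own method; the data frame and the placeholder "unknown" are made-up assumptions:

```python
import numpy as np
import pandas as pd

# Made-up data: "age" and "phone" are optional fields some people left blank
df = pd.DataFrame({
    "age": [20.0, np.nan, 40.0, 30.0],
    "phone": ["111", None, "333", None],
})

# Derived value: replace missing ages with the mean of the known ages
df["age"] = df["age"].fillna(df["age"].mean())

# Fixed value: replace missing phone numbers with a placeholder string
df["phone"] = df["phone"].fillna("unknown")

print(df)
```

The missing age becomes 30.0 (the mean of 20, 40 and 30), and both missing phone numbers become "unknown".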

import numpy as np
from sklearn.impute import SimpleImputer

imputer = SimpleImputer(missing_values=np.nan, strategy='mean')
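Creating the imputer only configures it; the values are filled in when you call fit_transform. A minimal sketch on a made-up NumPy array, showing both a derived value (strategy="mean") and a fixed value (strategy="constant"):

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Made-up feature matrix: 3 rows, 2 columns, one NaN in each column
X = np.array([
    [1.0, 10.0],
    [np.nan, 20.0],
    [3.0, np.nan],
])

# strategy="mean" learns each column's mean (ignoring NaN) during fit,
# then replaces each NaN with its column's mean
mean_imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
X_mean = mean_imputer.fit_transform(X)

# strategy="constant" fills every NaN with the same fixed value
const_imputer = SimpleImputer(missing_values=np.nan, strategy="constant", fill_value=0)
X_const = const_imputer.fit_transform(X)

print(X_mean)   # column means used: 2.0 and 15.0
print(X_const)
```

In a real project you would usually fit the imputer on the training data only and then call transform on the test data, so the test set is filled with statistics learned from training.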

3. Standardisation

It is a technique that rescales each feature so that it has a mean of 0 and a standard deviation of 1. If a feature follows a Gaussian (normal) distribution, standardisation turns it into a standard Gaussian distribution.
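Concretely, standardisation applies the usual z-score formula to every value x of a feature, where μ is that feature's mean and σ its standard deviation over the n samples:

$$
z = \frac{x - \mu}{\sigma}, \qquad \mu = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (x_i - \mu)^2}
$$

Subtracting μ moves the mean to 0, and dividing by σ scales the spread to a standard deviation of 1.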

For this, scikit-learn has a ready-made tool that does all the work for us in a single line.
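The post does not name the tool; one common option is the StandardScaler class from sklearn.preprocessing (the sklearn.preprocessing.scale function is a function-style alternative). A minimal sketch on made-up data, checked against the formula computed by hand:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up data: 4 samples, 2 features on very different scales
X = np.array([
    [1.0, 100.0],
    [2.0, 200.0],
    [3.0, 300.0],
    [4.0, 400.0],
])

# fit_transform learns each column's mean and standard deviation,
# then applies z = (x - mean) / std to every value in that column
scaler = StandardScaler()
X_std = scaler.fit_transform(X)

# The same result computed by hand (StandardScaler uses the population std, ddof=0)
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_std)
print(X_std.mean(axis=0), X_std.std(axis=0))  # approximately [0, 0] and [1, 1]
```

After scaling, both columns are on the same scale even though the second one was originally 100 times larger.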

For more info, refer to the sklearn documentation.

This blog will continue with other methods of pre-processing data for machine learning, so stay tuned.
